A Short Collection of Resources on AI Energy & Water Consumption

February 2025

This is a list of resources that I developed as I considered the potential role of AI in early childhood education. The notes under each source are my own callouts for that particular focus; they are not summaries and do not reflect the rich array of additional information and resources covered in each article or report.

The list is not even remotely comprehensive, and it is intentionally limited to mainstream reporting rather than academic research journals. This is to underscore that the information is readily available to the general public and also to provide sources that are at an accessible reading level for high school students (and some middle schoolers).

I’ve particularly looked for articles that report on the things that industry leaders are saying to one another or to investors, which means that the list doesn’t include the work of many excellent scholars who have written important books and articles critical of AI (e.g., Emily Bender, Timnit Gebru, Damien P. Williams, or Gary Marcus). Article bylines are included in the text so educators can easily search and find journalists who are reporting on AI and climate issues.

WATER CONSUMPTION

How much energy can AI use? Breaking down the toll of each ChatGPT query – The Washington Post

By Pranshu Verma and Shelly Tan 18 September 2024

A single 100-word email generated by an AI chatbot using GPT-4 requires 519 milliliters of water, a little more than one 16 oz. bottle.

Generative AI’s environmental costs are soaring — and mostly secret

By Kate Crawford 20 February 2024

Generative AI systems need enormous amounts of fresh water to cool their processors and generate electricity. In West Des Moines, Iowa, a giant data-centre cluster serves OpenAI’s most advanced model, GPT-4. A lawsuit by local residents revealed that in July 2022, the month before OpenAI finished training the model, the cluster used about 6% of the district’s water.

As Google and Microsoft prepared their Bard and Bing large language models (LLMs), both had major spikes in water use — increases of 20% and 34%, respectively, in one year, according to the companies’ environmental reports. One preprint suggests that, globally, the demand for water for AI could be half that of the United Kingdom by 2027.

You’ll Be Astonished How Much Power It Takes to Generate a Single AI Image

By Victor Tangermann 5 December 2023

According to Google’s 2023 Environmental Report, the company used 5.6 billion gallons of water in 2022, a 20 percent increase over its 2021 usage.

AI doesn’t just require tons of electric power. It also guzzles enormous sums of water. | Fortune

By Jane Thier and Fortune Editors 9 January 2025

To send one email per week for a year, ChatGPT uses up 27 liters of water.

Generative AI and Climate Change Are on a Collision Course | WIRED

By Sasha Luccioni 18 December 2024

Data centers are slurping up huge amounts of freshwater from scarce aquifers, pitting local communities against data center providers in places ranging from Arizona to Spain. In Taiwan, the government chose to allocate precious water resources to chip manufacturing facilities to stay ahead of the rising demands instead of letting local farmers use it for watering their crops amid the worst drought the country has seen in more than a century.

Elon Musk’s xAI supercomputer stirs turmoil over smog in Memphis : NPR

By Dara Kerr 11 September 2024

When the supercomputer gets to full capacity, the local utility says it’s going to need a million gallons of water per day…

ENERGY CONSUMPTION

AI already uses as much energy as a small country. It’s only the beginning. | Vox

By Brian Calvert 28 March 2024

According to the IEA, a single [standard] Google search takes 0.3 watt-hours of electricity, while a ChatGPT request takes 2.9 watt-hours. Researcher Sasha Luccioni, interviewed by Calvert, said, “Switching from a nongenerative, good ‘old-fashioned’ AI approach to a generative one can use 30 to 40 times more energy for the exact same task.”

To keep an AI model up to date, you have to keep training it, which means the model continues to grow (demanding more server space) and therefore draws exponentially more energy.

Generative AI’s environmental costs are soaring — and mostly secret

By Kate Crawford 20 February 2024

In January 2024, OpenAI CEO Sam Altman told the World Economic Forum’s annual meeting in Davos, Switzerland, that the next wave of generative AI systems will consume vastly more power than expected, and that energy systems will struggle to cope. (He’s relying on a breakthrough in nuclear fusion to come to the rescue.)

Within years, large AI systems are likely to need as much energy as entire nations.

ChatGPT is already consuming the energy of 33,000 homes. It’s estimated that a search driven by generative AI uses four to five times the energy of a conventional web search.

https://www.washington.edu/news/2023/07/27/how-much-energy-does-chatgpt-use/

Sajjad Moazeni, a University of Washington assistant professor of electrical and computer engineering, concluded, “Just training a chatbot can use as much electricity as a neighborhood consumes in a year.”

AI is poised to drive 160% increase in data center power demand | Goldman Sachs

Goldman Sachs Research 14 May 2024

Between 2022 and 2030, the demand for power will rise roughly 2.4% … around 0.9 percentage points of that figure will be tied to data centers.

Data centers will use 8% of US power by 2030, compared with 3% in 2022.

“the overall increase in data center power consumption from AI [will] be on the order of 200 terawatt-hours per year between 2023 and 2030. By 2028, our analysts expect AI to represent about 19% of data center power demand.”

A Computer Scientist Breaks Down Generative AI’s Hefty Carbon Footprint | Scientific American

By Kate Saenko & The Conversation US 25 May 2023

In 2019, researchers found that creating a generative AI model called BERT with 110 million parameters consumed the energy of a round-trip transcontinental flight for one person. The number of parameters refers to the size of the model, with larger models generally being more skilled. Researchers estimated that creating the much larger GPT-3, which has 175 billion parameters, consumed 1,287 megawatt hours of electricity and generated 552 tons of carbon dioxide equivalent, the equivalent of 123 gasoline-powered passenger vehicles driven for one year. And that’s just for getting the model ready to launch, before any consumers start using it. According to the latest available data, ChatGPT had over 1.5 billion visits in March 2023.

Elon Musk’s xAI supercomputer stirs turmoil over smog in Memphis : NPR

By Dara Kerr 11 September 2024

When the supercomputer gets to full capacity, the local utility says it’s going to need a million gallons of water per day and 150 megawatts of electricity, enough to power 100,000 homes.

You’ll Be Astonished How Much Power It Takes to Generate a Single AI Image

By Victor Tangermann 5 December 2023

Stable Diffusion XL, an open-source model, used almost as much energy per image as it takes to fully charge a smartphone.

Taking a closer look at AI’s supposed energy apocalypse – Ars Technica

By Kyle Orland 25 June 2024

You’d have to watch 1,625,000 hours of Netflix to consume the same amount of power it takes to train GPT-3.

AI Is a Humongous Electricity Hog, and the Environment Can Benefit – Bloomberg

Editorial Board 16 April 2024

“Next time you ask ChatGPT for a lasagna recipe, consider how much computing power you’re using: On a typical day, the AI chatbot handles an estimated 195 million queries, consuming enough electricity to supply some 23,000 US households. By 2026, booming AI adoption is expected to help drive a near-doubling of data centers’ global energy use, to more than 800 terawatt-hours — the annual carbon-emission equivalent of about 80 million gasoline-powered cars.”

Generative AI and Climate Change Are on a Collision Course | WIRED

By Sasha Luccioni 18 December 2024

In 2024, “Microsoft and Google, two of the leading big tech companies investing heavily in AI research and development, missed their climate targets.”

Data centers already use 2 percent of electricity globally. In countries like Ireland, that figure goes up to one-fifth of the electricity generated, which prompted the Irish government to declare an effective moratorium on new data centers until 2028.

Places like “Data Center Alley” in Virginia are mostly powered by nonrenewable energy sources such as natural gas, and energy providers are delaying the retirement of coal power plants to keep up with the increased demands of technologies like AI.

My (Luccioni’s) latest research shows that switching from older standard AI models—trained to do a single task such as question-answering—to the new generative models can use up to 30 times more energy just for answering the exact same set of questions.

AI Needs So Much Power, It’s Making Yours Worse

By Leonardo Nicoletti, Naureen Malik, and Andre Tartar, Bloomberg Technology 27 December 2024

AI data centers are multiplying across the US and sucking up huge amounts of power. New evidence shows they may also be distorting the normal flow of electricity for millions of Americans… more than three-quarters of highly-distorted power readings across the country are within 50 miles of significant data center activity.

Every day, Americans reach into their refrigerators or turn on their dishwashers without much thought given to the electricity flowing through their homes. But a hidden problem – distorted power supplies – now threatens mundane tasks like running a dishwasher or relying on a refrigerator. “The term for the issue is ‘bad harmonics.’ It may seem a bit esoteric, but you can think of it like the static that can be heard when a speaker’s volume is jacked up higher than it can handle. Electricity travels across high-voltage lines in waves, and when those wave patterns deviate from what’s considered ideal, it distorts the power that flows into homes. Bad harmonics can force home electronics to run hot, or even cause the motors in refrigerators and air conditioners to rattle. It’s an issue that can add up to billions of dollars in total damage.”

The worse power quality gets, the more the risk increases. Sudden surges or sags in electrical supplies can lead to sparks and even home fires.

Amid Arizona’s data center boom, many Native Americans live without power – The Washington Post

By Pranshu Verma 23 December 2024

A fierce battle for electric power is being waged across the nation, and Nez is one of thousands of people who have wound up on the losing end. Amid a boom in data centers, the energy-intensive warehouses that run supercomputers for Big Tech companies, Arizona is racing to increase electricity production. In February, the state utility board approved an 8 percent rate hike to bolster power infrastructure throughout the state, where data centers are popping up faster than almost anywhere in the United States. But it rejected a plan to bring electricity to parts of the Navajo Nation land, concluding that electric customers should not be asked to foot the nearly $4 million bill.

By Marc Levy 25 January 2025

Looking for a quick fix for their fast-growing electricity diets, tech giants are increasingly looking to strike deals with power plant owners to plug in directly, avoiding a potentially longer and more expensive process of hooking into a fraying electric grid that serves everyone else.

It’s raising questions over whether diverting power to higher-paying customers will leave enough for others and whether it’s fair to excuse big power users from paying for the grid.

The arrangement between the Susquehanna nuclear power plant in Berwick, Pennsylvania, and Amazon Web Services (AWS), called a “behind-the-meter” connection, is the first such arrangement to come before the Federal Energy Regulatory Commission (FERC). For now, FERC has rejected a deal that could eventually send 960 megawatts (about 40% of the plant’s capacity) to the data center. That’s enough to power more than a half-million homes…

Federal officials say fast development of data centers is vital to the economy and national security, including to keep pace with China in the artificial intelligence race.

In theory, the AWS deal would let Susquehanna sell power for more than it gets by selling into the grid. Talen Energy, Susquehanna’s majority owner, projected the deal would bring as much as $140 million in electricity sales in 2028, though it didn’t disclose exactly how much AWS will pay for the power.

AI Power Needs Threaten Billions in Damages for US Households

By Leonardo Nicoletti, Naureen Malik, and Andre Tartar, Bloomberg Technology 27 December 2024

AI needs so much power, it’s making yours worse. New evidence shows that data centers can distort the normal flow of electricity for millions of Americans in ways that can burn out household appliances, cause short circuits, and in a few cases, start fires. Industry calls this “bad harmonics.” Think of it like the buzzing or static that can distort the sound of speakers when the volume is cranked too high.

“The problem is threatening billions in damage to home appliances and aging power equipment, especially in areas like Chicago and ‘data center alley’ in Northern Virginia, where distorted power readings are above recommended levels. A Bloomberg analysis shows that more than three-quarters of highly-distorted power readings across the country are within 50 miles of significant data center activity.”

AI Leaders Gather in Saudi Arabia to Talk Energy – Bloomberg

By Marissa Newman, reporting from the Future Investment Initiative (FII) gathering in Riyadh, nicknamed “Davos in the Desert,” 30 October 2024

“some of the guests at the glitzy confab are also drawing attention to the potential Achilles heel of the enterprise: the energy-hungry data centers that underpin AI are already straining electricity grids around the world.

Schwarzman of Blackstone, which is building a $25 billion data center empire, estimated that the AI boom could potentially propel electricity use to soar 40% in the next decade. He warned that could slow the development of the technologies, quite aside from its disruption to global economies.

“In four years, giant economies, at the rate that expansion is going to happen, are going to stock out of electricity unless there’s either efficiencies in semiconductors and other types of things or you’re going to have to slow down the growth or have a giant expansion,” he said.

Some tech giants like Microsoft Corp., Amazon.com Inc. and Google are exploring nuclear energy to power their AI projects. Musk, whose autonomous cars and AI chatbot businesses require huge amounts of energy, signaled he was looking at other sources.”

Also useful:

Consultation paper on AI regulation: emerging approaches across the world – UNESCO Digital Library

This post is an appendix to “It’s Time to Pause Use of AI in Early Childhood Education: A Provocation” on LinkedIn.

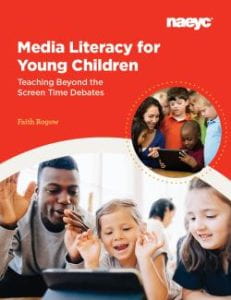

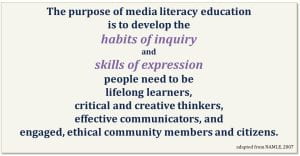

So what happens if we change the paradigm? What if the launch pad for technology integration is instead the question, “How do we help children become literate in a digital world?” Now, rather than focusing on counting minutes of screen time, we get a rich array of activities and interactions that help children develop the “habits of inquiry and skills of expression necessary for people to be critical thinkers, thoughtful and effective communicators, and informed and responsible members of society,” as the National Association for Media Literacy Education puts it in its

Gerhard Trumler

“Media literacy analysis isn’t about showing children what they missed by pointing out what we notice. It’s about asking them what they notice and helping them build the skills they need to see more.”

Many hiking trails and parks include a multitude of signs: no motorized vehicles on trails; dogs welcome (or forbidden); historical plaques; park logos; trail maps; explanation panels of natural features; and even hiker-created trail markers. You could guide children in analyzing any or all of these and ask questions like: What does that sign mean? How do you know? Why do you think they made a special marker to help us notice this rock formation? What colors do they use on the signs and why do you think they chose those colors? Why do you think the park logo includes a tree?

Then give a prompt (connected to curricular goals) that provides an opportunity to decide when it would be helpful to use a macro or close-up and when a wider shot might be a better choice. For example, you might build on lessons about butterfly habitat:

And if you’re skeptical about young children’s abilities to impact their communities, take a lesson from second graders at Caroline Elementary School. As part of a science curriculum that integrates media literacy, they made a

Everyone teaches the seasons. How about helping children analyze common depictions of seasons (e.g., posters at schools, holiday cards, store signs and decorations) and compare those media to their actual lives?

To help children compare the poster’s messages about seasons to what really happens where they live, have them take a picture of the same thing(s) outdoors over the course of a school year and note the changes over time. Ask children to determine what they should photograph. What in their environment changes and what stays the same? You might want to invite them to photograph different types of plants to see which change a lot and which seem not to change at all. That might spark an investigation of the difference between, say, deciduous trees and evergreens. This could be especially effective if your poster of the seasons uses a changing tree to symbolize the four seasons.

When weather precludes time outdoors, turn attention to analyzing media messages about nature, ecology, or sustainability. Help children use media literacy questions to examine their books, apps, movies, or games. Focusing on media they already know works best. Model asking questions like:

You might also help children look at food packaging. Identify food packaging as a form of advertising, which is a form of communication. What’s the package saying to us? Are there clues that children can spot that tell them which packages contain foods that are from plants, trees, vines, or bushes? Which just pretend that real fruit or vegetables are inside? Hint: Teach children to look for the word “flavored.” Even if the word is different in their home language, the packages they are likely to encounter in the U.S. will use the English word, so teach the word in both languages. Pre-readers can look for the first couple of letters, “F” and “L.” When the word “flavored” is accompanied by pictures of real fruit or vegetables it means that the package is almost certainly wearing a disguise. Real foods don’t need fancy or disguised packages to tempt us – they’re delicious all by themselves!

BTW, if you want a great example of how kindergartners compared websites to learn how best to grow tomato plants, check out Vivian Vasquez and Carol Felderman’s brilliant book,